INSTALLING TouchOSC

From your iPad, visit the Apple App Store to install TouchOSC and get it running on your iPad.

DOWNLOADING THE TouchOSC EDITOR

From your computer, visit the Hexler Software web site to download the TouchOSC Editor application for your Mac or PC. The editor lets you upload custom layouts to TouchOSC, such as MOTU’s CueMix FX layout.

TouchOSC is a modular OSC and MIDI control surface for iPhone, iPod Touch and iPad by hexler. It supports sending and receiving Open Sound Control and MIDI messages over Wi-Fi, CoreMIDI inter-app communication and compatible hardware. TouchOSC Editor is a Java application for designing TouchOSC layouts that can then be synced to your iOS device.

TouchOSC | Setup Traktor

TouchOSC

First we'll have to get TouchOSC connected to the computer running Traktor. In the case of Traktor we will want to use a MIDI connection. You can use any of TouchOSC's MIDI connection types.

For wireless operation this could be a CoreMIDI Network Session for Mac OS X and iOS devices, or a TouchOSC Bridge connection for any combination of OS and device. Of course any wired MIDI connection will also work using any CoreMIDI compatible MIDI interface for iOS devices.

Once you have established a MIDI connection to your computer, load the Jog-On layout from TouchOSC's Layout screen.

Traktor

- Download Jog-On.tsi

- Open Traktor, go to ‘Preferences’, then ‘Controller Manager’

- Click the large ‘Import’ button at the bottom of this screen and browse for the TSI file

- Ensure that In-Port and Out-Port on this screen are set to the MIDI interface you are using for TouchOSC's connection; if you are not sure about this setting, choose ‘All Ports’ here

Controls in Detail

Note that commands shown in yellow are activated by holding down Shift. Commands shown in red are activated when in Browse Mode.

FX Units

Loop / Beatjump Controls

Loop Recorder, Volume & EQ Kills

Jogwheels & Beatmashing

Transport Controls

Sample Deck Controls

I never cease to be amazed at the versatility of Sonic Pi in producing music in such a variety of ways. Versions 3.0.1 for the Pi and 3.1 for Mac and Windows (with the option of a self build for other platforms) brought two major additions to Sonic Pi: interfaces to handle external midi and OSC messages. These greatly increased the range of musical activities Sonic Pi could take part in. It could now interact with external synths and keyboards, be controlled by programs such as TouchOSC, or interact with external sensors and devices such as touch inputs, light displays or even mechanical solenoids, as in my Sonic Pi driven Xylophone. However, one thing that at first sight seemed unachievable was to “play” incoming midi files. It is easy to see and interact with midi signals that appear in the cues log. The problem is that Sonic Pi's design is fundamentally different from the way a midi stream works. In Sonic Pi you specify both the pitch AND the duration of a note as it starts. A midi stream instead signals that a note of a certain pitch should start playing, without specifying its duration. It then sends a further signal at some arbitrary later time to say that the note should stop. The kludgy way to handle an incoming file in Sonic Pi has therefore been to start every note with a predetermined fade-out (:release) time appropriate to the tempo of the music being played. Depending on the release time chosen, this gives either a rather mushy mixture of overlapping notes or a stream of notes with large gaps between them.
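To make the mismatch concrete, here is a small sketch in plain Ruby (the language Sonic Pi is built on, but this is not Sonic Pi code). It pairs note_on and note_off events to recover each note's duration. The event list and its timings are invented for illustration. The point is that a note's duration only becomes known when its ending event arrives, while Sonic Pi's play wants it at the start.

```ruby
# Plain Ruby, not Sonic Pi. Each event is [time, kind, note, velocity];
# these events are invented for illustration.
EVENTS = [
  [0.0, "note_on", 60, 90],
  [0.5, "note_on", 64, 80],
  [1.0, "note_off", 60, 0],
  [1.75, "note_on", 64, 0] # note_on with velocity 0 also ends a note
].freeze

# Pair each note start with the event that ends it. A note's duration is only
# known once its ending event arrives, long after the note has started.
def notes_with_durations(events)
  started = {} # note number => start time of the note currently sounding
  finished = []
  events.each do |time, kind, note, vel|
    if kind == "note_on" && vel > 0
      started[note] = time
    elsif started.key?(note)
      start = started.delete(note)
      finished << { note: note, start: start, duration: time - start }
    end
  end
  finished
end

notes_with_durations(EVENTS).each { |n| puts n }
```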

I have in the past written a program to work on one input channel. It started a long (say 5 second) note playing in Sonic Pi when a note_on signal arrived. It then waited for a subsequent note_off (or note_on with zero velocity) signal and used the Sonic Pi kill command to stop the note. Arrays kept track of which notes were playing, together with a reference to the play command that started each one. When a stop signal arrived for that pitch, the program retrieved the reference and killed the playing note.

Since then, I have developed further techniques in Sonic Pi, particularly the use of wild cards when intercepting incoming cues (both midi and OSC). These make it feasible to apply this technique more widely. A user on the in-thread.sonic-pi.net site started a long thread about playing midi input files, and I have been involved in it. Following on from that thread, I resolved to see if it was possible to produce such a system. The result is the program that this article features.

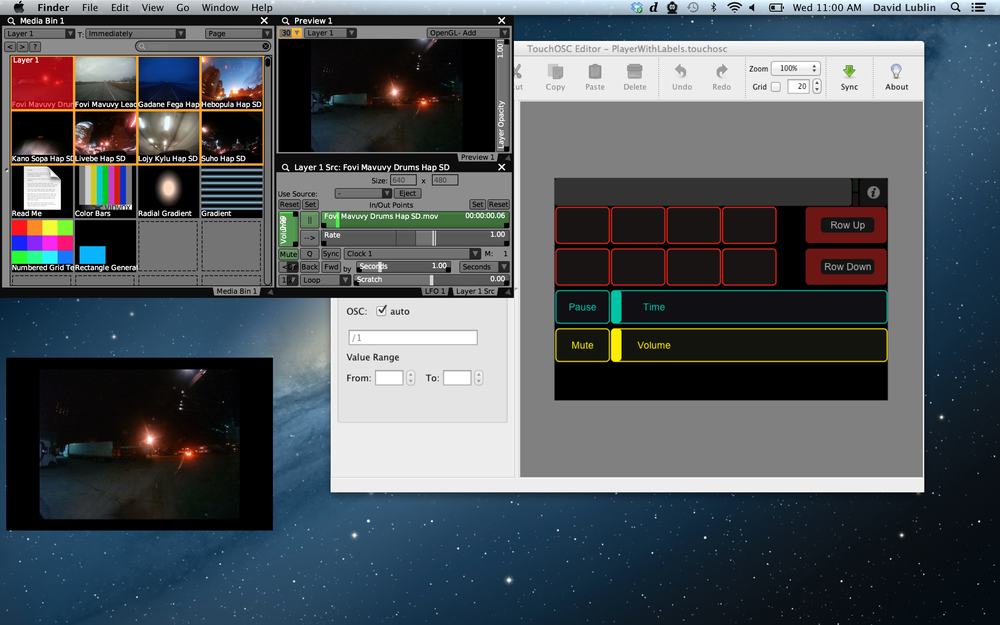

I have developed two versions of the program. Both parse and play midi files in the same way. The more complex one also lets you choose which synths are allocated to the 16 channels and change them while the file is playing. It can also alter the overall volume as the piece plays. This is done with the TouchOSC program running on an associated tablet or phone, either Apple (iOS) or Android. TouchOSC displays touch-sensitive controls on the screen, which communicate with Sonic Pi by means of OSC messages. These virtual controls make the synth and volume selections, and Sonic Pi sends feedback to keep them showing the current values.

TouchOSC Screen

Both programs also cater for midi channel 10 input. This is normally reserved for the control of percussion/drumset instruments. Each “note” input on this channel is used to select a percussion instrument to be played. This is achieved in Sonic Pi by means of a folder containing sample files for each different percussive instrument, drums, cymbals, wood blocks, triangle etc. and they are triggered to sound by means of the incoming note cue.

I will describe the more complex program here, but the simpler one merely excludes all the OSC stuff and uses a manually typed list of synths and a fixed volume setting.

Unfortunately I couldn’t get the code to format properly in WordPress.

Instead, use this link to view the code in a new window while you read this article. Of course, if you’re impatient you can just download it, the samples and the TouchOSC template and crack on with those! Download and installation details are given at the end of this article.

The program starts by turning off most of the logging to ease the load on Sonic Pi, which can be considerable for a 16 channel input file. Unfortunately, it does not seem possible at present to suppress the message “killing sound nnn”, which therefore appears repeatedly while a midi file is playing. If you have a very demanding file to play, you can get the best response by also switching off the log window and the cue window in the Sonic Pi editor preferences.

There follow some user settings. The first two are commented out. Normally you use the program without use_real_time, but for modest files you can enable it if you really need minimum latency between the source and what you hear. In the other direction, you may want to increase the normal schedule-ahead time, particularly if you are running a demanding file on a Raspberry Pi. I usually leave both commented out.

The use_osc line sets the IP address of the associated TouchOSC tablet or phone, and the port on which it is listening. You also have to set the IP address of your Sonic Pi computer and Sonic Pi's listening port in the TouchOSC program on the tablet or phone. That port will be 4559 for Sonic Pi 3.0.1 or 3.1, but 4560 for Sonic Pi 3.2dev or later.

percpath sets the path to the folder containing the percussion samples. I will make a set of samples available for download at the end of the article.

drums is a hash which relates each midi note value to the name of the corresponding sample. If you use different samples you will have to alter this hash.
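Here is a minimal plain-Ruby sketch of the lookup. It assumes the incoming notes follow the General MIDI percussion numbering. The sample names are hypothetical placeholders that would have to match the files in your percpath folder. The sketch also counts channels from 0, as the program does, so midi channel 10 is index 9.

```ruby
# Hypothetical drums hash: General MIDI percussion note number => sample name.
# The names are placeholders and must match the files in your percpath folder.
DRUMS = {
  36 => "bass_drum",
  38 => "snare",
  42 => "closed_hihat",
  46 => "open_hihat",
  49 => "crash_cymbal",
  51 => "ride_cymbal"
}.freeze

# Channel index 9 is midi channel 10 when channels are counted from 0.
PERCUSSION_CHANNEL = 9

# Return the sample name for a note on the percussion channel, or nil when
# the note is on another channel or has no sample mapped (so it can be skipped).
def drum_sample_for(ch, note)
  return nil unless ch == PERCUSSION_CHANNEL
  DRUMS[note]
end

puts drum_sample_for(9, 38)
```

Returning nil for unmapped notes lets a caller skip notes that have no sample, rather than failing on a missing file.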

use_transpose may be useful if you want to shift the sound up or down. Change its value from 0 to the number of semitones you want; negative values shift the sound down.

pan is a list of the default pan settings I set for each channel. These build up from the centre, with channel 10 also set to the centre. Adjust as you wish.

synthRing holds a ring of synth names, indexed by channel, that allocates synths to the playing channels. It can be set manually or altered from the TouchOSC interface. It MUST have at least one value in it, but can have up to 16 if you wish. (If you have that many, the tenth entry will normally be ignored, since channel 10 plays the percussion samples.)
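Sonic Pi rings wrap around when indexed past their end. That is why a ring with fewer than 16 entries still gives every channel a synth, and why it cannot be empty. Here is a plain-Ruby sketch of the same behaviour. Ruby arrays do not wrap, so the modulo operator stands in for the ring. The synth list and the helper name are examples only.

```ruby
# Plain-Ruby stand-in for a Sonic Pi ring: indexing modulo the size wraps
# around, so a short list still gives every one of the 16 channels a synth.
SYNTHS = [:piano, :saw, :pluck].freeze # example contents, 1 to 16 names

def synth_for_channel(synths, ch)
  # An empty list would mean division by zero below, hence the guard.
  raise ArgumentError, "synth list must not be empty" if synths.empty?
  synths[ch % synths.size]
end

channels = (0..5).map { |ch| synth_for_channel(SYNTHS, ch) }
p channels
```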

sList is a list of synths corresponding to the labels on the TouchOSC interface. It can be altered, but should match the labels set on the TouchOSC screen.

single probably shouldn’t be in this section. It is a flag that controls what the TouchOSC screen does with a chosen synth. When single is true, the chosen synth replaces the whole list. When single is false, it is appended to the existing list.

ampAtten is the attenuation value set by the fx :level wrapper around the main playing live loop. It starts at 0.5 but can be altered here manually, or by the volume slider on the TouchOSC interface.

After the user-set values are set up some osc messages are sent to initialise the controls on the TouchOSC screen. The first one sets the Single Add switch to Single, the second sets the volume to midscale, and the remaining four initialise the four labels listing the current synth choices.

Next, three lists are set up. The first two each contain 16 similar lists, one for each channel. np has 128 entries for each channel, each one corresponding to a possible midi note value. If that note is playing on the given channel, the corresponding entry is set to 1. Otherwise it is 0, which is the initial value of every element when the program is run. Next is nr, which again has an entry for each possible note on each channel. This time the entry holds a reference to the play command for the playing note. That reference is retrieved when the note_off (or note_on with velocity 0) arrives and is used to kill the note.

klist is a simple 16-entry list that holds, for each channel, the note currently waiting to be killed by the notekill function. A sleep command follows, to give these fairly large array structures time to be set up before the rest of the program runs.
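One pitfall is worth checking when building per-channel tables like np and nr in Ruby. Array.new(16, inner) stores the same inner array 16 times, so marking a note on one channel marks it on all of them. The block form creates a separate inner array for each channel. This is a general Ruby sketch, not a claim about how the original program builds its lists.

```ruby
# Pitfall: Array.new(16, obj) stores the SAME object 16 times.
shared = Array.new(16, Array.new(128, 0))
# The block form runs once per channel, giving 16 independent inner arrays.
np = Array.new(16) { Array.new(128, 0) } # 1 = note playing, 0 = silent
nr = Array.new(16) { Array.new(128) }    # reference to each playing note, or nil
klist = Array.new(16)                    # per-channel reference waiting to be killed

shared[3][60] = 1 # marks note 60 on channel 3...
np[3][60] = 1

puts shared[0][60] # ...but channel 0 sees it too: prints 1
puts np[0][60]     # independent channel: prints 0
```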

parse_sync_address is a function which enables all the channels to be processed with a single live loop. To achieve this, wild cards are used in the sync command in the single live_loop :midi_in, so that it picks up note_on and note_off cues from all channels. The function then examines the cue that actually matched the wild cards and extracts its values: whether it was note_on or note_off, and which channel it was on. It does this with get_event, an undocumented function internal to Sonic Pi. This may change in the future, but it has been stable for some time now, and I have used it in many programs. I have explained its operation elsewhere on the in-thread site if you want more detail. Essentially it will turn a matched cue like “/midi/*/*/*/note_*” into

["midi", "iac_driver_spconnect", "0", "3", "note_on"] from which you can extract that it was a note_on that gave the cue, on channel 3. It fills in what the four * were matching.
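Once the matched address is available as a string, pulling out the command and channel is just a split on "/". The sketch below is plain Ruby and assumes you already have that address string. It does not reproduce the get_event call that parse_sync_address relies on. The helper name parse_midi_address is hypothetical, and the channel conversion assumes the cue reports channels as 1 to 16.

```ruby
# Hypothetical helper: split a matched cue address into its parts.
def parse_midi_address(address)
  address.split("/").reject(&:empty?)
end

parts = parse_midi_address("/midi/iac_driver_spconnect/0/3/note_on")
kind = parts[4]        # "note_on" or "note_off"
ch = parts[3].to_i - 1 # assumes channels arrive as 1..16; index 0..15
puts "#{kind} on channel index #{ch}"
```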

The live_loop :getSingleAdd receives the OSC messages from the two multi-toggle switches labelled Single and Add on the TouchOSC screen. It sets the variable single to true or false depending on whether the Single switch is active. It uses parse_sync_address to find out which switch gave the value 1.0 (i.e. was switched on) in the cue. Two signals are received when a change takes place: one switch goes on and the other goes off.

The update function is used to control the text strings of the four labels at the bottom of the TouchOSC display. These are generated from the current contents of the synthRing discussed previously. Typically this might contain

(ring :piano, :saw, :pluck, :tb303, :pluck)

What the function will do is to convert this to a list of words which will end up like

["", "ring", "piano", "saw", "pluck", "tb303", "pluck"]

It then clears the contents of all four labels using four OSC commands. After a short sleep, it repopulates them by taking batches of four synth names until the list is exhausted. The imponderable bit in this function is the regular expression dd.split(/[^[[:word:]]]+/), which I must admit I did not work out but lifted from the internet. It splits the text at every run of characters that are not letters, digits or underscores, and it does the required job!
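The split and batching can be tried in plain Ruby. The sketch assumes dd holds the text form of synthRing, as in the example above. How the original function combines the names in each batch is not shown, so joining them with spaces is an assumption.

```ruby
# Assumed text form of synthRing (dd in the program).
ring_text = "(ring :piano, :saw, :pluck, :tb303, :pluck)"

# Split on every run of non-word characters; the leading "(" yields "".
words = ring_text.split(/[^[[:word:]]]+/)
# => ["", "ring", "piano", "saw", "pluck", "tb303", "pluck"]

names = words.drop(2) # discard "" and "ring"
# Assumption: names in each batch of four are joined with spaces.
labels = names.each_slice(4).map { |batch| batch.join(" ") }
labels = (labels + [""] * 4).first(4) # always exactly four label strings
p labels
```

Padding to four strings means every label gets written, so a label left over from a longer list is cleared rather than left showing old names.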

The live_loop :getSynths controls the selection of synths by the TouchOSC interface.

It uses the parse_sync_address function to find out the setting of the 6-way multi-toggle switch on the TouchOSC interface that selects synths. Having found which switch is active, it translates the switch number (1-6) to the range 0-5 and uses it to index the sList described earlier to select a synth. If the variable single is true, it replaces the current synthRing with a single entry corresponding to the chosen synth. Otherwise, it adds the new synth to the existing ring (provided there are fewer than 16 there already). It does this by converting the ring to a list, adding the new synth name on the end and then converting it back to a new ring. Having altered the synthRing, the live_loop calls update to display the new values on the TouchOSC interface.
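Here is a plain-Ruby sketch of the selection logic, with an array standing in for the ring. The six names in S_LIST and the helper name choose_synth are hypothetical. In the real program sList must match the labels on your TouchOSC screen.

```ruby
# Hypothetical six-entry sList; it should match the TouchOSC labels.
S_LIST = [:piano, :saw, :pluck, :tb303, :fm, :prophet].freeze
MAX_SYNTHS = 16

# switch_number is the active multi-toggle position, 1..6.
def choose_synth(synths, switch_number, single)
  name = S_LIST[switch_number - 1] # 1..6 -> index 0..5
  return [name] if single          # Single: replace the whole list
  return synths if synths.size >= MAX_SYNTHS
  synths + [name]                  # Add: append to the end
end

p choose_synth([:saw], 3, false)
```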

The live_loop :removeLast detects a press on the remove button on the TouchOSC interface and, if the synthRing has more than one entry, removes the last one. It does this by converting the ring to an array, reversing it, dropping the first item (i.e. the original last item), reversing it again and finally converting it back to a ring. It then calls the update function to update the display.
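In plain Ruby, the reverse/drop/reverse sequence gives the same result as slicing off the last element in one step. Both are sketched below on arrays, with hypothetical helper names. Each keeps at least one entry, as the program requires.

```ruby
# Reverse, drop the first item, reverse again (the approach described above).
def remove_last_reversed(synths)
  synths.size > 1 ? synths.reverse.drop(1).reverse : synths
end

# Equivalent one-step slice: everything except the last element.
def remove_last_sliced(synths)
  synths.size > 1 ? synths[0..-2] : synths
end

p remove_last_reversed([:piano, :saw, :pluck])
p remove_last_sliced([:piano, :saw, :pluck])
```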

The notekill function receives a channel as input. It creates a thread so that it does not hold up the live_loop :midi_in that calls it. It then retrieves the reference to the note to be stopped from klist, which holds an entry for each channel and is filled in by the live_loop :midi_in. It quickly reduces the volume to 0 over 0.01 seconds (to reduce clicks) and then uses the kill function to stop the note via its reference.

The remainder of the program is wrapped in two effects wrappers. The first is fx :reverb, which does what it says on the tin, giving some reverb to the notes that will play. This can be adjusted to taste by altering the room: and mix: parameters.

The second is fx :level, which sets the overall volume of the playing notes from the ampAtten variable. ampAtten starts at 0.5, but in the TouchOSC version it can be altered by the live_loop :getVol. For this to work, the reference to the fx :level (held in vol) is first stored in the time state using set :vol,vol, so that it can be retrieved inside the :getVol live loop. This live loop waits for an input from the vol slider on the TouchOSC interface and reads the slider position (in the range 0.0->1.0) into val[0]. It then uses a nifty bit of Ruby code, ampNew=[0.1,val[0],1.0].sort[1], which limits the value to the range 0.1->1.0 and stores it in ampNew. The new value is then used to control the fx :level using

control get(:vol),amp: ampNew
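The sort trick works because the middle element of [low, value, high] after sorting is the value limited to that range. Here is a plain-Ruby sketch, with hypothetical function names. It also shows Comparable#clamp (Ruby 2.4 and later), which gives the same result.

```ruby
# The sort trick: the middle of [low, value, high] is value clamped to the range.
def amp_from_slider(val)
  [0.1, val, 1.0].sort[1]
end

# Ruby 2.4+ also has Comparable#clamp, which does the same thing.
def amp_from_slider_clamp(val)
  val.clamp(0.1, 1.0)
end

[0.0, 0.5, 1.0, 1.3].each { |v| puts "#{v} -> #{amp_from_slider(v)}" }
```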

live_loop :midi_in is the heart of the whole program. This live_loop waits for incoming midi messages which match the wild card string “/midi/*/*/*/note_*”. This will match any controller, on any port and any channel, that is sending either note_on or note_off messages. The parse_sync_address function is then used to extract the actual channel and the actual command (note_on or note_off) that has been received. Each note_on or note_off message carries two pieces of data: the midi number of the note (0->127) and its velocity (0->127). These are held in note and vel.

If the command is note_on and vel is > 0, a new note has been detected. The loop checks the entry in np[ch][note] to see whether the note is already playing on that channel; if it is, the new note is ignored. Otherwise it marks the note as playing with np[ch][note]=1 and selects the synth for the channel with use_synth synthRing[ch]. Next it checks whether ch is 9. This is midi channel 10, because the program numbers channels 0-15 to make array access easier. For any other channel, it starts a note playing with a sustain: value of 5 and an amp: value of vel/127.0 (range 0->1.0). A reference to the playing note is held in x and then stored with nr[ch][note]=x. The notekill function can later use it to kill the note off. If ch is 9, the appropriate sample is selected via percpath and the drums hash and played. As for the other channels, a reference to the playing sample is stored in the nr array.

The second section of the :midi_in live loop deals with stopping notes. This section is reached if the detected midi message is either a note_off or has a vel of 0. Some players use note_off to stop a note and others use note_on with vel 0, so this catches both.

First the np array is checked to see whether the relevant note is playing, with if np[ch][note]==1. If so, the klist entry for that channel is set to the playing note's reference from the nr array with klist[ch]=nr[ch][note], and the note-playing entry is reset with np[ch][note]=0. Finally the notekill function is called for the relevant channel.
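Here is a plain-Ruby model of the start/stop bookkeeping described above. It only records the actions the live loop would take and does not play anything. The class name is hypothetical. Strings such as "node-3-60" are placeholders for the references that Sonic Pi's play and sample commands return. The model also shows why vel/127.0 is used: in Ruby, vel/127 would be integer division and give 0 for every velocity below 127.

```ruby
# Plain-Ruby model of the note bookkeeping in live_loop :midi_in.
# Strings like "node-3-60" stand in for the references that Sonic Pi's
# play/sample return (hypothetical placeholders).
class NoteTracker
  def initialize
    @np = Array.new(16) { Array.new(128, 0) } # 1 while a note is playing
    @nr = Array.new(16) { Array.new(128) }    # reference to that note
  end

  # Returns the action the live loop would take for one midi message.
  def handle(kind, ch, note, vel)
    if kind == "note_on" && vel > 0
      return [:ignore] if @np[ch][note] == 1  # already playing
      @np[ch][note] = 1
      source = ch == 9 ? :sample : :synth     # index 9 is midi channel 10
      @nr[ch][note] = "node-#{ch}-#{note}"
      [:start, source, @nr[ch][note], vel / 127.0] # 127.0 avoids integer division
    elsif @np[ch][note] == 1                  # note_off, or note_on with vel 0
      @np[ch][note] = 0
      [:kill, @nr[ch][note]]                  # what goes into klist[ch]
    else
      [:ignore]
    end
  end
end

t = NoteTracker.new
p t.handle("note_on", 3, 60, 127)
p t.handle("note_on", 3, 60, 0)
```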

The program is completed by ending the two fx wrappers.

DOWNLOADING AND INSTALLATION

You can download both versions of the program and the TouchOSC template here.

The simpler version only requires the program file and the Drumset Samples. The TouchOSC version requires further software.

The template file is stored as index.xml. Save the file as index.xml, then compress or zip it and rename the resulting file mplayer.touchosc. Open this file with the free TouchOSC editor, which can be downloaded for Mac, PC or Linux (including Raspberry Pi) from near the bottom of this web page. From the same web page you can download the (paid for) client for your tablet or phone for around £5 (from the Apple App Store, Google Play or the Amazon Appstore).

You send the template from the editor to the client on your phone or tablet using the sync function in the editor, as described in the documentation on the Hexler website here.

You can download a zip file of the drumset samples here. Unzip it and place the folder in a convenient location on your computer, then alter the path (percpath) in the midifileplayer program to suit.

On a Mac the most effective midi player program to use is MidiPlayer X, available on the App Store for £1.99 at the time of writing. It is a very useful and excellent program which I highly recommend. You simply load the midi file using its File menu commands. Then select the source port connected to your Sonic Pi from the popup menu on the front of the app, and use the transport icons to start it playing. The port should be a virtual port you have set up using the Audio MIDI Setup utility on your Mac; I have a video here which explains how to do this if you are unsure. On a Raspberry Pi 4 running Buster, you can start a midi file streaming from a terminal using the built-in aplaymidi command (part of alsa-utils).

With Sonic Pi running, aplaymidi -l will list the available midi ports. In my case it lists port 14:0, with client name Midi Through and port name Midi Through Port-0. This port should be visible in the list of midi ports in the Sonic Pi IO preferences window, listed as midi_through_port-0.

So with the midifileplayer program running in Sonic Pi, you can start a midi file streaming to it using aplaymidi -p 14:0 path/to/midifile.mid, where midifile.mid is the file you want to listen to. This should work on other Linux-based machines running Sonic Pi as well. Unfortunately I don’t have a Windows PC with Sonic Pi at present, but you should be able to find suitable apps on that platform to support streaming midi files.

If you have any queries about this software, the best place to raise them is the in-thread.sonic-pi.net forum site.